Elasticsearch is a state-of-the-art full-text search engine you might want to use in your next project. It is based on a NoSQL-like document store and optimized for amazingly fast search queries. A powerful API enables features like fuzzy matching (find ‘Toronto’ when searching for ‘Torronto’), stemming (find ‘race’ when searching for ‘racing’) and n-grams (find ‘spaghetti’ with ‘ghett’).

In this article, I will show you how to set up an Elasticsearch instance using Docker and PHP and how to easily perform queries.

Docker Setup

Before we start the container, we create a network since in the end, we will have two containers communicating with each other:

docker network create -d bridge elasticbridge

Elastic provides an official Docker image for Elasticsearch; the command below pulls it from Elastic's registry at docker.elastic.co. You can start an instance using the following command:

docker run -d --network=elasticbridge --name elasticsearch -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:6.4.0

If you don’t already have it in your cache, the Elasticsearch image will be downloaded automatically. By using the -d switch, the container is sent to the background and does not block your terminal session. You can always have a look at the container’s output by using the docker logs command:

docker logs elasticsearch

The --network=elasticbridge option attaches the container to the network we created, so that other containers in the same network can reach the Elasticsearch service.

We have published the container's ports 9200 and 9300, so you can talk to its REST API by sending requests to localhost:9200, for example:

curl -XGET http://127.0.0.1:9200/_cat/health

This command should return the following output if everything has worked so far:

1536999057 08:10:57 docker-cluster green 1 1 0 0 0 0 0 0 - 100.0%
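The fourth column of that line is the cluster status (green, yellow or red). As a minimal sketch, assuming a POSIX shell with awk and curl available, the status can be extracted so that a script can wait until the cluster is ready. The names health_status and wait_for_cluster are hypothetical helpers, not part of Elasticsearch:

```shell
#!/bin/sh
# Print the cluster status, i.e. the 4th whitespace-separated column
# of a single _cat/health line.
health_status() {
  printf '%s\n' "$1" | awk '{ print $4 }'
}

# Hypothetical helper: poll the health endpoint until the status is
# green or yellow, giving up after 30 attempts (roughly one minute).
wait_for_cluster() {
  url="${1:-http://127.0.0.1:9200/_cat/health}"
  attempt=0
  while [ "$attempt" -lt 30 ]; do
    status="$(health_status "$(curl -s "$url")")"
    case "$status" in
      green|yellow) echo "$status"; return 0 ;;
    esac
    attempt=$((attempt + 1))
    sleep 2
  done
  echo "cluster did not become ready" >&2
  return 1
}

# Offline demonstration using the sample line shown above.
health_status "1536999057 08:10:57 docker-cluster green 1 1 0 0 0 0 0 0 - 100.0%"
```

Calling wait_for_cluster right after docker run is one way to avoid sending requests before Elasticsearch has finished starting.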

Adding Documents

Before we set up a simple PHP application to communicate with Elasticsearch, I will show you how to add documents using the REST API directly over HTTP.

curl -H "Content-Type: application/json" -XPOST "http://127.0.0.1:9200/firstindex/user" -d "{ \"firstname\" : \"Donald\", \"lastname\" : \"Trump\"}"

We use the curl command to send a POST request to the Elasticsearch server. /firstindex specifies that we want to add our document to that specific index (which you can think of as a database) – it is automatically created if it doesn’t exist yet. Furthermore, we add our document to the type user (corresponding to a table in a relational database). The document itself is passed in the JSON format and consists of two fields (firstname and lastname) and their respective values.

To demonstrate the search capabilities, let’s add two more users:

curl -H "Content-Type: application/json" -XPOST "http://127.0.0.1:9200/firstindex/user" -d "{ \"firstname\" : \"Barack\", \"lastname\" : \"Obama\"}"

curl -H "Content-Type: application/json" -XPOST "http://127.0.0.1:9200/firstindex/user" -d "{ \"firstname\" : \"Melania\", \"lastname\" : \"Trump\"}"
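The escaped double quotes in the three commands above are easy to get wrong. The following is a minimal sketch, assuming a POSIX shell, that builds the JSON body with printf and wraps the request in a function. The names user_json and index_user are hypothetical helpers, and the sketch inserts the values verbatim, so it only works for names without quotes or backslashes:

```shell
#!/bin/sh
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

# Build the JSON body for a user document.
# Values are inserted verbatim: they must not contain quotes or backslashes.
user_json() {
  printf '{ "firstname" : "%s", "lastname" : "%s" }' "$1" "$2"
}

# Send the document to index "firstindex", type "user".
# POST without an ID lets Elasticsearch generate the document ID.
index_user() {
  curl -s -H "Content-Type: application/json" \
    -XPOST "$ES_URL/firstindex/user" \
    -d "$(user_json "$1" "$2")"
}

# Print the body that would be sent (no request is made here).
user_json "Donald" "Trump"
echo
```

With the container running, index_user Barack Obama would send the same request as the second command above.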

Searching Documents

Before creating a more complex query, let’s first verify that our entered data has actually been saved:

curl -XGET "http://127.0.0.1:9200/firstindex/user/_search/?pretty=true"

This will query for all documents of type user in index firstindex. The output (as requested by the parameter ?pretty=true) should be formatted properly and be equivalent to the following:

{
  "took" : 2,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 3,
    "max_score" : 1.0,
    "hits" : [
      {
        "_index" : "firstindex",
        "_type" : "user",
        "_id" : "F1ZQ3GUBPff1ZX4kkcru",
        "_score" : 1.0,
        "_source" : {
          "firstname" : "Barack",
          "lastname" : "Obama"
        }
      },
      {
        "_index" : "firstindex",
        "_type" : "user",
        "_id" : "GFZQ3GUBPff1ZX4kqMrp",
        "_score" : 1.0,
        "_source" : {
          "firstname" : "Melania",
          "lastname" : "Trump"
        }
      },
      {
        "_index" : "firstindex",
        "_type" : "user",
        "_id" : "FlZM3GUBPff1ZX4kLcq5",
        "_score" : 1.0,
        "_source" : {
          "firstname" : "Donald",
          "lastname" : "Trump"
        }
      }
    ]
  }
}

As you can see, all three users have been fetched successfully. Elasticsearch also outputs additional information about how long the query took (in milliseconds), how many results it produced and for each retrieved document its index, type and identifier.
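If only part of that response is of interest, Elasticsearch's filter_path request parameter can trim it down. The following is a minimal sketch, assuming a POSIX shell. The names search_url and search_filtered are hypothetical helpers, and only the URL is printed, so the script also runs without a server:

```shell
#!/bin/sh
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

# Build a search URL that asks Elasticsearch to return only the
# response fields listed in $1 (comma-separated, dot notation).
search_url() {
  printf '%s/firstindex/user/_search?pretty=true&filter_path=%s' "$ES_URL" "$1"
}

# Fetch only the total hit count and the stored documents.
search_filtered() {
  curl -s -XGET "$(search_url "hits.total,hits.hits._source")"
}

# Print the URL that search_filtered would request.
search_url "hits.total,hits.hits._source"
echo
```

Running search_filtered against the container should return just the hit count and the _source of each document, without the took, _shards and per-hit metadata fields.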

Connecting with PHP

For the second part of this article, we will set up a simple frontend with PHP to display Elasticsearch's results.

We start by spinning up a container with preinstalled PHP 7.2 and Apache, map it to our local port 8080 and name it php-elastic-frontend. We will mount a local .php file into the container so we can directly modify it without having to restart the container all the time. So first create a new PHP file somewhere in your filesystem with the following content:

<?php phpinfo();

and save it as index.php. Then, run the following docker command:

docker run -d --network=elasticbridge -p 8080:80 --name php-elastic-frontend -v <path to your src folder>:/var/www/html php:7.2-apache

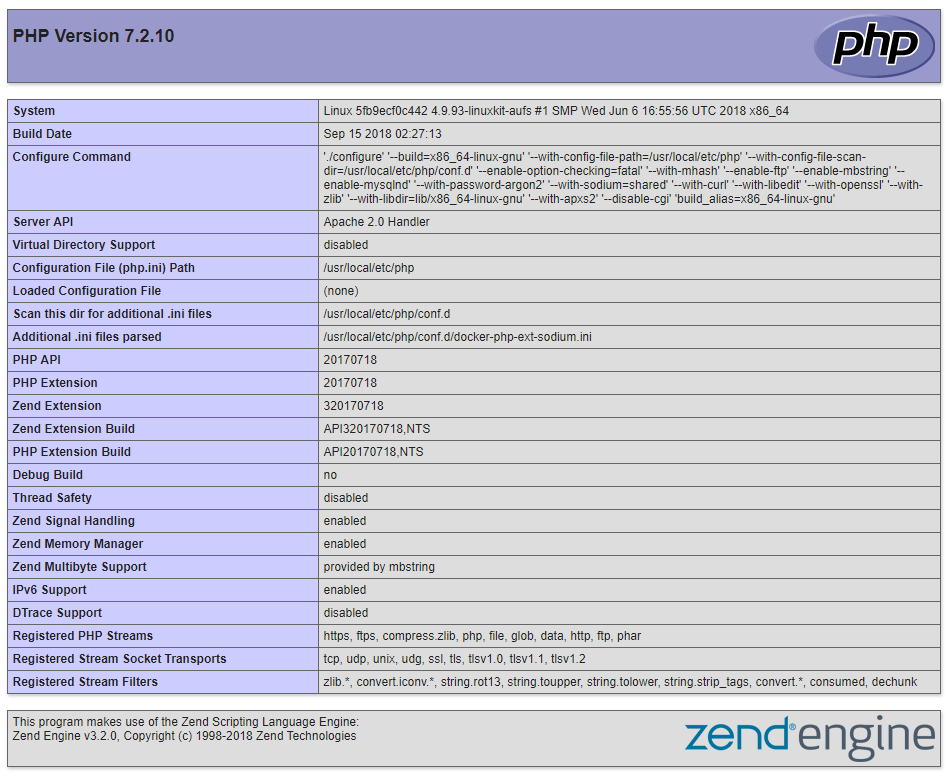

If everything worked, you should be able to browse to http://127.0.0.1:8080 and see the following output:

You can now open the index.php file in your favorite text editor and change it to the following:

<h1>Search Results</h1>
<ul>
  <li>First Result</li>
  <li>Second Result</li>
</ul>

As you reload your browser, the PHPInfo should be gone and the simple frontend template should become visible.

Setting Up Elasticsearch-PHP

We could use curl to communicate with the Elasticsearch server as we did in the first section. However, it is much more convenient to use the elasticsearch PHP package. We will install it using Composer. For a real project, we would set this package up properly via a Dockerfile (stay tuned for another article on that topic), but for a quick solution, we will just open a shell inside our PHP container with docker exec and install it there:

docker exec -it php-elastic-frontend /bin/bash

This spawns a new shell in the container and allows us to enter any commands. We first download Composer and then use it to install the package:

apt-get update
apt-get install -y wget git
wget https://getcomposer.org/composer.phar
php composer.phar require elasticsearch/elasticsearch

After Composer has done its magic, the elasticsearch package (and all of its dependencies) has been downloaded into the vendor/ folder.

We can now change the index.php file to the following (explanations follow):

<?php
require 'vendor/autoload.php';

use Elasticsearch\ClientBuilder;

$hosts = [
    'elasticsearch:9200', // IP + Port
];

$client = ClientBuilder::create()
    ->setHosts($hosts)
    ->build();

$params = [
    'index' => 'firstindex',
    'type' => 'user',
    'body' => [
        'query' => [
            'match' => [
                'lastname' => 'Trump'
            ]
        ]
    ]
];

$response = $client->search($params);
echo '<pre>', print_r($response, true), '</pre>';
?>

<h1>Search Results</h1>
<ul>
  <li>First Result</li>
  <li>Second Result</li>
</ul>

Let’s quickly go through this line by line.

require 'vendor/autoload.php';

We import Composer’s autoloader so we don’t have to take care of including each class file individually.

use Elasticsearch\ClientBuilder;

Import the namespace so we can use the ClientBuilder class without explicitly mentioning its namespace.

$hosts = [
    'elasticsearch:9200', // IP + Port
];

This configures where our Elasticsearch server is reachable. Since we have configured the two containers to be in the same Docker network, we can directly refer to the container’s name and Docker automatically resolves it to its IP.

$client = ClientBuilder::create()
    ->setHosts($hosts)
    ->build();

This uses the ClientBuilder to configure and build a Client object, which serves as our interface to the Elasticsearch server.

$params = [
    'index' => 'firstindex',
    'type' => 'user',
    'body' => [
        'query' => [
            'match' => [
                'lastname' => 'Trump'
            ]
        ]
    ]
];

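Here we specify the search parameters. For our example, we want to receive all documents of type user from the index firstindex whose lastname field matches Trump. Note that a match query is a full-text query: the search text is analyzed before it is compared, so this is not a strict string equality check.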
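For comparison, the body part of $params corresponds to a JSON request body that could also be sent with curl, as in the first part of this article. The following is a minimal sketch, assuming a POSIX shell. The names match_query, fuzzy_query and search_users are hypothetical helpers, and the second body adds the match query's fuzziness option to illustrate the fuzzy matching mentioned in the introduction:

```shell
#!/bin/sh
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

# JSON body equivalent to the 'body' entry of $params.
# The value is inserted verbatim: no quotes or backslashes allowed.
match_query() {
  printf '{ "query" : { "match" : { "lastname" : "%s" } } }' "$1"
}

# Variant that tolerates small typos via the "fuzziness" option.
fuzzy_query() {
  printf '{ "query" : { "match" : { "lastname" : { "query" : "%s", "fuzziness" : "AUTO" } } } }' "$1"
}

# Send a query body to the search endpoint of index firstindex, type user.
search_users() {
  curl -s -H "Content-Type: application/json" \
    -XGET "$ES_URL/firstindex/user/_search?pretty=true" -d "$1"
}

# Print both bodies (no request is made here).
match_query "Trump"; echo
fuzzy_query "Trupm"; echo
```

With the container running, search_users "$(fuzzy_query Trupm)" should still find the two Trump documents despite the swapped letters, whereas the plain match query for the misspelled name should not.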

$response = $client->search($params);
echo '<pre>', print_r($response, true), '</pre>';

We execute the query (notice that it is actually not executed in the container running the PHP application but rather sent to the other container running Elasticsearch) and output the results. The <pre> tags make sure that our output is easily readable.

When we open the index.php in our browser again, we can see the following output:

Array
(
    [took] => 9
    [timed_out] =>
    [_shards] => Array
        (
            [total] => 5
            [successful] => 5
            [skipped] => 0
            [failed] => 0
        )
    [hits] => Array
        (
            [total] => 2
            [max_score] => 0.6931472
            [hits] => Array
                (
                    [0] => Array
                        (
                            [_index] => firstindex
                            [_type] => user
                            [_id] => bo573GUB5GzvNJfl38JF
                            [_score] => 0.6931472
                            [_source] => Array
                                (
                                    [firstname] => Donald
                                    [lastname] => Trump
                                )
                        )
                    [1] => Array
                        (
                            [_index] => firstindex
                            [_type] => user
                            [_id] => cY583GUB5GzvNJflIMI-
                            [_score] => 0.2876821
                            [_source] => Array
                                (
                                    [firstname] => Melania
                                    [lastname] => Trump
                                )
                        )
                )
        )
)

You can see that the query produced two results.

Creating a List of Matches

To conclude this article, we will output the matches as a list of first and last names in our HTML template.

First, we remove this line from our index.php since it only served as a proof-of-concept:

echo '<pre>', print_r($response, true), '</pre>';

Next, we will create an array of results from the Elasticsearch output:

$resultNames = array_map(function($item) {
    return $item['_source'];
}, $response['hits']['hits']);

We use array_map to reduce each hit to its _source entry, which leaves us with a list of arrays that each hold a firstname and a lastname.

Finally, we modify our HTML template:

<h1>Search Results</h1>
<ul>
  <?php foreach($resultNames as $resultName): ?>
  <li><?php echo implode(' ', $resultName); ?></li>
  <?php endforeach; ?>
</ul>

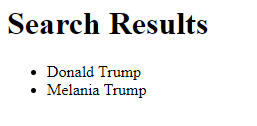

For each search result, we output the first and last name, separated by a space. The <li> tags create an unordered list. Our code should generate the following result:

Outlook

While this seems to be quite some work for a simple text search, we have barely scratched the surface of what is possible with Elasticsearch. In another article, I will show you its advanced searching features and how to create a setup that scales to tremendous amounts of documents, all based on the simple setup discussed here. Stay tuned!
